Kerry Johnson

Enabling apps for the car

Almost every consumer who owns a smart phone or tablet is familiar with the app experience: you go to an online marketplace, find apps of interest, and download them onto your device. With the HTML5 SDK, the automotive team at QNX is creating an analogous experience for the car.

Just as Apple, Android, and RIM provide SDKs to help vendors develop apps for their mobile platforms, QNX has created an SDK to help vendors build apps for the QNX CAR 2 application platform. The closest analogies you will find to our HTML5 SDK are Apache Cordova and PhoneGap, both of which provide tools for creating mobile apps based on HTML5, CSS, JavaScript, and other web technologies.

App developers want to see the largest possible market for their apps. To that end, QNX also announced today that it will participate in the W3C’s Web and Automotive Workshop. The workshop aims to achieve industry alignment on how HTML5 is used in the car and to find common interfaces to reduce platform fragmentation from one automaker to the next. Obviously, app developers would like to see a common auto platform, while automakers want to maintain their differentiation. Thus, we believe the common ground achieved through W3C standardization will be important.

It bears mentioning that, unlike phone and tablet apps, car apps must offer a user experience that takes driver safety into consideration. This is a key issue, but beyond the scope of this post, so I won’t dwell on it here.

So what’s in the SDK, anyway?

As in any SDK, app developers will find tools to build and debug applications, and APIs that provide access to the underlying platform. Specifically, the SDK will include:

- APIs to access vehicle resources, such as climate control, radio, navigation, and media playback

- APIs to manage the application life cycle: start, stop, show, hide, etc.

- APIs to discover and launch other applications

- A packaging tool to combine application code (HTML, CSS, JavaScript) and UI resources (icons, images, etc.) with QNX CAR APIs to create an installable application – a .bar file

- An emulator for the QNX CAR 2 platform to test HTML5 applications

- Oh yeah, and documentation and examples
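To make the first two items a little more concrete, here is a minimal sketch of how an app might combine life-cycle events with a vehicle resource. Every name in it (`createFakePlatform`, `createVolumeApp`, `on`, `emit`, `audio.getVolume`, `audio.setVolume`) is a hypothetical stand-in invented for illustration; none of them are taken from the QNX CAR 2 APIs, and the 0 to 100 volume range is likewise an assumption.

```javascript
// Hypothetical stand-in for the platform. These names are NOT the real
// QNX CAR 2 APIs; they only illustrate the shape of the idea.
function createFakePlatform() {
  const listeners = {};          // event name -> array of callbacks
  const audio = { volume: 50 };  // pretend vehicle resource (assumed 0..100)

  return {
    // Register a callback for a life-cycle event such as "start" or "hide".
    on(event, callback) {
      (listeners[event] = listeners[event] || []).push(callback);
    },
    // Fire an event, as a platform might when it starts or hides an app.
    emit(event) {
      (listeners[event] || []).forEach((callback) => callback());
    },
    audio: {
      getVolume() {
        return audio.volume;
      },
      setVolume(value) {
        // Clamp to the assumed 0..100 range before storing.
        audio.volume = Math.max(0, Math.min(100, value));
        return audio.volume;
      },
    },
  };
}

// A tiny "app" that tracks its life-cycle state and only touches the
// vehicle resource while it is visible.
function createVolumeApp(platform) {
  const state = { running: false, visible: false };

  platform.on("start", () => { state.running = true; });
  platform.on("show", () => { state.visible = true; });
  platform.on("hide", () => { state.visible = false; });
  platform.on("stop", () => { state.running = false; state.visible = false; });

  return {
    state,
    volumeUp(step = 5) {
      // Ignore input while hidden; just report the current value.
      if (!state.visible) return platform.audio.getVolume();
      return platform.audio.setVolume(platform.audio.getVolume() + step);
    },
  };
}

const platform = createFakePlatform();
const app = createVolumeApp(platform);
platform.emit("start");
platform.emit("show");
console.log(app.volumeUp()); // 55
```

The point of the sketch is the division of labor: the platform owns the resource and decides when the app starts, shows, hides, and stops, while the app simply reacts to those events.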

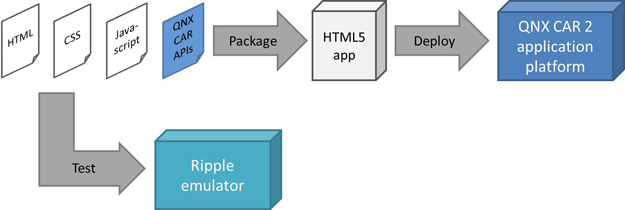

The development and deployment flow looks something like this:

Emulator and debugging environment

The QNX automotive team has extended the Ripple emulator environment to work with the QNX CAR 2 application platform. Ripple is an emulation environment, originally designed for BlackBerry smart phones, that RIM has open-sourced on GitHub.

Using this extended emulator, application developers can test their applications with the correct screen resolution and layout, and watch how their application interacts with the QNX CAR 2 platform APIs. For example, consider an application that controls audio in a car: balance, fade, bass, treble, volume, and so on. The screenshot below shows the QNX CAR 2 screen for controlling these settings in the Ripple emulator.

Using the Ripple emulator to test an audio application.

In this example, you can use the on-screen controls to adjust volume, bass, treble, fade, and balance, and observe the changes to the underlying data values in the right-hand panel. You can also work the other way: by changing the controls on the right, you can observe changes to the on-screen display. The Ripple interface supports many other QNX CAR 2 features; for examples, see the QNX Flickr page.
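If you're curious how this kind of two-way flow can work in principle, here is a small sketch. It is not Ripple's actual implementation; the `AudioSettings` class, the `makeView` helper, and the view ids are all hypothetical names made up for this illustration. The idea is simply that both the emulated car screen and the data panel observe one shared set of values, so a change made in either place shows up in the other.

```javascript
// Hypothetical model of the two-way flow described above.
// This is an illustration only, not how Ripple is actually built.
class AudioSettings {
  constructor() {
    this.values = { volume: 50, bass: 0, treble: 0, fade: 0, balance: 0 };
    this.observers = [];
  }

  // Each observer needs an `id` and an `update(name, value)` method.
  subscribe(observer) {
    this.observers.push(observer);
  }

  // Change one setting and notify every observer except the one that
  // made the change (identified by `source`).
  set(name, value, source) {
    if (!Object.prototype.hasOwnProperty.call(this.values, name)) {
      throw new RangeError(`unknown setting: ${name}`);
    }
    if (this.values[name] === value) return; // nothing changed, nothing to notify
    this.values[name] = value;
    for (const observer of this.observers) {
      if (observer.id !== source) observer.update(name, value);
    }
  }
}

// A view just records what it has been told to display.
function makeView(id) {
  const shown = {};
  return {
    id,
    shown,
    update(name, value) {
      shown[name] = value;
    },
  };
}

const settings = new AudioSettings();
const carScreen = makeView("car-screen");
const dataPanel = makeView("data-panel");
settings.subscribe(carScreen);
settings.subscribe(dataPanel);

settings.set("bass", 3, "car-screen");  // user drags the on-screen bass control
settings.set("fade", -2, "data-panel"); // tester edits the value in the side panel

console.log(dataPanel.shown); // { bass: 3 }
console.log(carScreen.shown); // { fade: -2 }
```

Skipping the originating view on notification is one simple way to avoid a feedback loop, since that view already shows the new value.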

You can also use the emulator in conjunction with the Web Inspector debugger to do full source-code debugging of your JavaScript code.

Creating native services

Anyone who has developed software for the QNX Neutrino OS knows that we offer the QNX Momentics Tool Suite for creating and testing C and C++ applications. With the QNX CAR 2 application platform, this is still the case. Native-level services are built with the QNX Momentics suite, and HTML5 applications are built with our new HTML5 SDK. We've decided to offer the suite and the SDK as separate packages so that app developers who need to work only in the HTML5 domain needn't worry about the QNX Momentics Tool Suite and vice versa. Together, these toolkits allow you to create HTML5 user interface components with underlying native services, where required.